Introduction

Every business today wants to talk about AI, predictive analytics, and a “future driven by data-fueled decisions”. That’s the shiny front-end promise. But the reality is far less glamorous and far more critical. None of it works without a disciplined data engineering lifecycle.

We have passed the point where analysts simply pull raw dumps into a spreadsheet, and yet, many stakeholders still insist on having a big, shiny ‘Export to Excel’ button. In most cases, the volume, velocity, and complexity of modern data streams demand an industrial-grade process to move data from messy sources to clean, reliable intelligence.

The path from a raw log file to a production-ready AI model is a rigorous, multi-stage journey – from ingestion to transformation to orchestration – all underpinned by governance and security. Get any one stage wrong, and the entire analytics house of cards collapses (very often leaving a hefty bill for cloud computing expenses).

Let's cut through the hype and focus on how a solid engineering lifecycle can power your goals.

Put strategy before tools…

A CTO or Head of Engineering gets cornered by the board, who have a new mandate from on high telling them they need to do something with AI. The executive, squeezed for a quick answer, turns to the first generative AI tool they can find and asks, “What can I do with AI?”

The answer that comes back is “buy Databricks.”

You can laugh, but this is happening. We have a generation of brilliant technology leaders, especially in established fields like manufacturing, who are now expected to be AI innovators overnight. For years, their world was process automation and generating reports. Now, they’re being asked to pull a rabbit out of a hat, and they’ve fallen out of the loop on the latest tech.

This creates the perfect opening for vendors like Snowflake and Databricks, who are practically kicking down the door to sell their platforms. For the cornered executive, picking a big, familiar name is the easy way out. The board is happy, a decision is made, and the problem is considered solved – for now. This is the fundamental problem.

The real work begins by pausing to ask what we want to achieve from a business perspective.

This is where we need to think like product designers. We must be intentional about shaping a user's entire interaction with a system to be natural and effective.

A board member who wants to know how the company is doing can get a daily email with a screenshot of a dashboard. But does anyone actually read that email anymore? After the fifth identical-looking report, our brains start to tune it out. We’re drowning in information, and a daily data dump just becomes noise.

The alternative is being able to actually point to the really interesting areas or, if you will, what’s now being called a "System of Intelligence." Instead of a static report, the system itself uses AI to generate a specific, timely alert. It doesn’t just show you the whole dashboard; it points to the one metric that’s out of line and tells you to pay attention. This is how you deliver value.

This shift requires business leaders to stop and articulate what they actually need. It’s the same conversation I’ve had a hundred times. They start by saying, “We definitely need real-time data.” But when you dig in, you find that updating the data once an hour, or even once a day is more than enough. That small distinction is the difference between an efficient system and one that racks up astronomical infrastructure costs for no good reason.

What’s the morale of the story? Focus on the problem first, then choose the right tool – don’t get distracted by shiny tools before you know what you need.

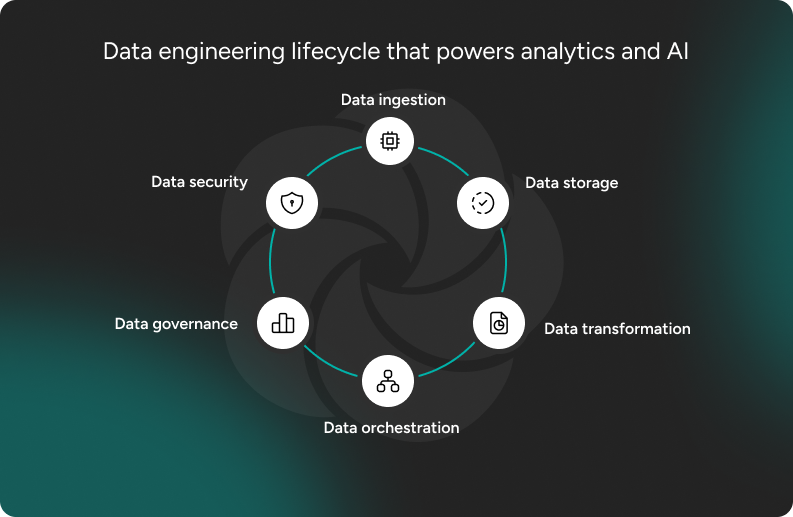

How data engineering lifecycle powers analytics and AI

Data ingestion

To build a data pipeline that works, you have to start at the end. The first question isn't "How do we get the data?" but "What business outcome are we trying to achieve?" The answer dictates every technical decision that follows, right down to the ingestion layer.

Let's use a real-world example. A client of ours monitors server farms where overheating is a constant risk. The obvious goal is to predict and prevent failures. An engineer might immediately think, "We need a streaming pipeline to ingest temperature data every second." That sounds impressive, but it could be a colossal waste of money.

The critical question is, what’s the return on that investment? If a server fails only once a year, building a complex, expensive real-time system is overkill. But this particular client’s revenue is tied directly to server uptime. For them, a handful of servers fail every day. Over a year, that’s thousands of lost machines and a significant hit to their bottom line. At this organization, the cost of an advanced monitoring platform is essential. Even a 2% improvement in server uptime provides a massive financial return.

This is how the data engineering lifecycle powers real results. The business case defines the technology, not the other way around. The client’s clear ROI justifies a near real-time ingestion strategy. For the company whose servers rarely fail, a simple, slow batch process that runs every few hours would be a much smarter choice.

A useful rule of thumb I heard at the ODSC conference helps frame these decisions (though it should be taken with a grain of salt). If an AI or automation project offers a 10x improvement, it’s a no-brainer. At 5x, it’s a powerful tool for augmenting human decision-making. But if the potential gain is only 2x, you’re likely building an expensive experiment.

Without this business-first, ROI-driven approach, data engineering is just a set of pipes. It might be technically elegant, but it delivers no value. When you work hand-in-hand with product and business leaders to understand the why, you build systems that don’t just move data – you build systems that move the needle.

Data storage

From a data engineer’s perspective, it’s tempting to think of it as “just putting data somewhere.” But it deserves much more attention – it’s the foundation that determines cost, performance, and ultimately how useful your analytics and AI initiatives will be.

Where should the data live? There’s no one-size-fits-all answer. Between databases, warehouses, and lakes, each option comes with trade-offs. For example, pushing everything into a warehouse might feel clean and modern, but as unstructured data grows, that setup can quickly balloon your infrastructure costs.

Knowing when to use a data platform – and when to hold off

As mentioned, some companies jump headfirst into platforms like Snowflake or Databricks because they’re drawn to the brand names, only to realize later they’re dealing with just a few dozen gigabytes of data. This happens more often than you'd think for customers whose IT concentrates mainly around their ERP system.

For datasets that fit comfortably in memory (tens of GB), local processing can sometimes outperform a cloud warehouse once orchestration/IO overheads are considered. The real challenges (and the real opportunities) start when your data doesn’t fit in memory anymore. That’s when decisions about how you store and organize it start to matter.

Cost and access patterns are another critical piece. Not all data is equal, and treating it as if it were is a recipe for waste. Cloud providers like AWS offer multiple storage tiers – from instant access down to archival layers where pulling data might take an hour but costs a fraction of the price. That’s perfect for backups or compliance scenarios where you rarely need the data. But when you do, it’s still there. Using these tiers intelligently can save significant money without sacrificing business needs.

And then there’s the question of format. This is where storage gets genuinely interesting. The way you write data to disk – row by row, column by column, with or without metadata – directly shapes how quickly you can query it and how much compute you burn in the process.

In fact, at STX Next, apart from being Snowflake and Databricks partners, we excel in using Apache Iceberg for exactly this reason. Iceberg is a table format for big data that makes analytical queries drastically more efficient by storing both the data and the metadata in a way that minimizes unnecessary reads. Instead of scanning hundreds of files to answer a single question, you might only need to touch two. In cloud environments where you pay for every read, that efficiency translates directly into savings.

So, “storage” is not only about keeping data safe, but also about shaping the economics and effectiveness of everything that happens downstream. In the right setup, your analysts and data scientists work faster, cheaper, and smarter. Get it wrong though, and you’re building a very expensive waiting room for data that never delivers value.

Data transformation

Transformation is arguably the most important stage because it dictates both data quality and business value. It’s the engine that converts inert records into potent information capable of driving significant business impact.

But let's be blunt, transformation is also expensive. Storage is cheap; computation is not. The process consumes valuable compute resources – distributed arrays of machines that process data primarily in memory (RAM). To avoid costly bottlenecks and system backpressure, you must engineer transformations to execute as fast as possible.

Cleaning, validating, and normalizing

The core of transformation involves data cleaning and validation. Sometimes, cleaning is a clever game of fill-in-the-blanks. For example, if a user’s birth date is missing but you have a national ID number, you can often derive or infer the missing piece. While you wouldn’t guess an individual’s age in a system like a government database, you can make statistical assumptions for analytical or AI modelling needs. If a small handful of records lack an age, inserting the average age of your user base might be "good enough" for a high-level statistical analysis. The key is understanding the specific needs of the consuming system.

Before any compute fires up, you need a plan. First, think through the business objectives: What are you trying to achieve? Second, account for data coming from heterogeneous systems. The same concept might be represented in wildly different formats across sources, demanding rigorous normalization or unification.

The ML prerequisite

A critical, often-overlooked transformation is the preparation for AI models. A model doesn't want age in years; it often demands features scaled between 0 and 1. If your user ages range from 20 to 60, you must rescale that spectrum so that 20 maps to 0 and 60 maps to 1. This isn't complexity for its own sake; classic machine learning algorithms are simply built to perform optimally with standardized input. It's much simpler for the data scientist if the data engineering pipeline handles this preparation.

Finally, there’s validation, which is extremely important. Fail to validate, and you might miss a critical, corrupting data point. You could end up processing terabytes of data, incurring costs of thousands or tens of thousands of dollars, only to produce garbage and have to start the entire costly process over.

Data orchestration

When designing orchestration for data pipelines, the key tension is how much to automate versus where human oversight remains essential.

Full automation has a lot of appeal. Workflows trigger themselves, dependencies manage themselves, retries and error handling run in the background, and the system hums along without constant human intervention. Automated pipelines also reduce human error, free engineers from repetitive tasks, and scale beyond what manual systems can sustain.

Still, automation is only as good as the rules you build into it. When an upstream feed changes structure unexpectedly, or data drifts in unseen ways, automated systems may continue to push bad data downstream unchecked.

What I say frequently is that – when we compare automation to human work – while automation clearly wins by covering the larger part of the 80/20 rule, in some cases it can’t beat the “gut feeling” an expert may feel when looking at data. They may come with the notion that “This looks odd compared to the last 30 days,” or “This transformation result doesn’t make business sense.”

In my experience, orchestration should rely on a balancing act. On a high level, my recommendation would be to automate everything that’s routine, predictable, and high volume, and reserve human oversight for the high-risk, ambiguous, or exceptional cases.

So, automation should manage scheduling, retries, lineage, alerting, and standard validations. Humans should step in when anomalies, schema drift, or critical logic changes arise. That balance gives you both speed and trust.

Data governance

The big decision in governance is how to structure ownership: centralized, federated, or fully self-service.

A centralized approach places all responsibility within a single team. It’s strong on consistency and compliance but often creates bottlenecks and limits agility. A federated model distributes ownership across business domains while maintaining shared standards. It balances flexibility with oversight, though coordination can get complex.

Then there’s self-service governance, often described as data mesh. This pushes ownership directly to domain teams and treats data as a product. It can empower faster access and innovation, but it requires cultural maturity and standardized policies. It also relies on “proper” infrastructure, where data producers and consumers can work together seamlessly – creating, sharing, and using data products without friction.

Getting the governance choice right – my perspective as Head of Engineering

At STX Next, we recommend focusing on automating governance rather than making decisions that could add layers of bureaucracy. Governance should happen by design, not by committee.

Whether this works depends heavily on context. Governance delivers the most value when organizations already have clear data problems and realistic timelines. It’s essential for companies with data-dependent processes, strict regulatory requirements, or active AI projects that demand clean, traceable data. But it won’t fix weak architecture, and it won’t succeed without dedicated stewardship or leadership buy-in.

The payoff is real, but varies by industry. In mixed cloud environments, every new platform can add weeks of integration complexity. Financial services often move faster because of regulatory pressure, while manufacturing takes longer due to operational complexity. Yet, to give you a sense of what’s attainable, 78% of our clients see positive ROI within 12 months.

The good news is, quick wins usually appear within the first 30-45 days, and they’re a result of better data quality monitoring.

By 60-90 days, teams see measurable impact in faster reporting and compliance readiness. The deeper, strategic value – like enabling AI projects and improving decision-making – merges over 6-12 months as governance matures.

Data security

Data governance and security shift fundamentally based on where your data lives. This choice between on-premise and cloud-native dictates your team’s approach to risk, compliance, and flexibility.

Choosing an on-premise model makes you the sovereign. By keeping everything in-house, you retain full control over infrastructure and data residency. This makes it simpler to meet extremely strict regulations.

Unfortunately, there is a catch – the cost, which is immense. This approach demands heavy upkeep and severely limits scalability. You own the hardware, the patching, and the monitoring; the operational burden and capital expenditure are high.

In the cloud-native world, compliance is shared. You use the provider’s sophisticated toolset for encryption and automated policy checks. This architecture is built for scale, which makes it simple to meet global standards and rapidly adjust resources.

The shared responsibility model is the cloud's biggest caveat. The vendor locks down the underlying infrastructure, but your team secures your data in the cloud. That means managing access, permissions, and configuration. Without rigorous governance, those configuration gaps immediately become security holes. You gain superior flexibility and lower initial costs, but you trade that for the necessity of constant vigilance over a dynamic security boundary.

You don’t have to make your data engineering choices alone

Whenever I talk about the data engineering lifecycle, I always underline that every single stage matters. Ingestion captures the right signals, storage affects speed and cost, and orchestration keeps things running. Once data’s transformed, it becomes insights for the business. And doing all this with a governance-first mindset builds trust for all stakeholders.

I’ve seen pipelines that technically “work” but barely move the needle. That’s usually because the bigger lifecycle picture is missing. Getting this entire setup right gives you faster access to insights, lower costs, and AI-ready data. Your teams then have an environment that lets them focus on impact instead of constantly trying to pin down errors in the pipeline.

If that’s your current struggle – or you’re overwhelmed by all the choices in building your data engineering strategy – reach out. At STX Next, we’ve been building data systems for 20+ years. We don’t rely on policy documents; governance is built straight into your pipelines, making your data reliable, scalable, and actionable. Check out our data engineering services if you’re looking for a partner who’ll turn your data lifecycle into a business advantage.